Expressing Data Dependencies in React

One-way data flow is a foundational concept in React (See? It’s on the homepage!). React is very opinionated about how data should flow through a component tree, how that data relates to component state, how to update that state, etc. But it is not very opinionated about how you get that data into the component tree in the first place.

Components depend on data too.

The point of a component-based UI is to provide some degree of encapsulation in this crazy world of increasingly complex web apps. The general goal is for a component to express all of its dependencies — other source modules, libraries / vendor code, even static files like CSS and images. If the component is specific about its dependencies, then we can statically analyze those dependencies to make sure we always include them (and nothing more), and we can make changes to the component without fear that it depends on or is affecting other parts of the system that we’re unaware of.

At least some of your React components will probably have data dependencies. I think of data dependencies as data a component cannot be sure it has (or will receive) when it renders. This data may come from an abstraction layer like a store in Flux, or it might come from an API endpoint directly. The point is that the component needs to specifically request this data from somewhere external to it when it renders — it cannot just rely on the data being passed in.

Take a super simplified example that uses the same pattern outlined in Thinking in React:

    var PROFILE = {
      name: 'Danny',
      interests: ['Books', 'Pizza', 'Space'],
    };

    class Profile extends React.Component {
      render() {
        const { profile } = this.props;
        return (
          <div>
            <h1>My name is {profile.name}!</h1>
            <p>My interests are: {profile.interests.join(', ')}</p>
          </div>
        );
      }
    }

    ReactDOM.render(
      <Profile profile={PROFILE} />,
      document.getElementById('container')
    );

This application has a data dependency — it depends on there being a variable called PROFILE in the global scope pointing to some data. This isn’t really a problem in this example because there aren’t any asynchronous dependencies and it’s a comically simple application, but we need a better solution for more realistic web apps. We would want the Profile component to have a way of expressing its dependency on that external data — a way for it to take matters into its own hands.

React points to the componentDidMount method as a place to load initial data via AJAX. This might give you something like:

    class Profile extends React.Component {
      constructor(props) {
        super(props);
        // Placeholder profile rendered until the API responds.
        this.state = {
          profile: {
            name: '',
            interests: [],
          },
        };
      }

      componentDidMount() {
        const { id } = this.props;
        // Fetch the profile once the component has mounted.
        api.get(`/api/profile/${id}`).then((response) => {
          this.setState({
            profile: response,
          });
        });
      }

      render() {
        const { profile } = this.state;
        return (
          <div>
            <h1>My name is {profile.name}!</h1>
            <p>My interests are: {profile.interests.join(', ')}</p>
          </div>
        );
      }
    }

    ReactDOM.render(
      <Profile id="1" />,
      document.getElementById('container')
    );

Note: I’m assuming there is some sort of api utility module in the examples. I’m also omitting the imports of things like ReactDOM and other components.

This works fairly well — the component is in charge of fetching the data it needs from the API, which provides isolation. But this component is starting to get a little too smart. It’s mixing data-fetching and presentation concerns. We could re-write it as a container component wrapping a presentational component:

    // profile-container.jsx
    export default class ProfileContainer extends React.Component {
      constructor(props) {
        super(props);
        // Placeholder profile rendered until the API responds.
        this.state = {
          profile: {
            name: '',
            interests: [],
          },
        };
      }

      componentDidMount() {
        const { id } = this.props;
        api.get(`/api/profile/${id}`).then((response) => {
          this.setState({
            profile: response,
          });
        });
      }

      render() {
        const { profile } = this.state;
        return (
          <Profile profile={profile} />
        );
      }
    }

    // profile.jsx
    export default (props) => (
      <div>
        <h1>My name is {props.profile.name}!</h1>
        <p>My interests are: {props.profile.interests.join(', ')}</p>
      </div>
    );

    // app.jsx
    ReactDOM.render(
      <ProfileContainer id="1" />,
      document.getElementById('container')
    );

Better, but it still doesn’t really play nice with third parties. This approach is a little too isolated and obscure. The componentDidMount method might not only handle data dependencies, so a third party interested only in data dependencies has no way of determining them. If, for example, you are rendering your components on the server, you need a way to extract these data dependencies — you need to know what to do before the component renders.

The part where I look for guidance from other things that sorta relate to components and data.

You might think to look for advice from other related parts of your stack, like your router and/or your state container. But react-router offers no opinion on how to express data dependencies:

For data loading, you can use the renderProps argument to build whatever convention you want — like adding static load methods to your route components, or putting data loading functions on the routes — it’s up to you.

If you’re using a Flux library like Redux, you might think to check there. It does suggest that data dependencies should go on the route components, but doesn’t really go into the details:

If you use something like React Router, you might also want to express your data fetching dependencies as static fetchData() methods on your route handler components. They may return async actions, so that your handleRender function can match the route to the route handler component classes, dispatch fetchData() result for each of them, and render only after the Promises have resolved. This way the specific API calls required for different routes are colocated with the route handler component definitions. You can also use the same technique on the client side to prevent the router from switching the page until its data has been loaded.

So, you could put “data loading functions on your routes,” as react-router mentioned, but if you’re separating your components into container and presentational components as mentioned above, then you probably have a set of components whose sole job is to ensure that all their child components have the data they need. I think this is the perfect place to express data dependencies!

Seeking Opinions

So, now we know where we want the data dependencies to live, but how exactly should we express them? We essentially just need to provide a way to get a signal that all the data dependencies have been fulfilled. It could be as simple as attaching an array of promise-returning functions to your route components:

    // profile-container.jsx
    class ProfileContainer extends React.Component {
      render() {
        const { data = {name: '', interests: []} } = this.props;
        return <Profile profile={data} />;
      }
    }

    // Promise-returning functions that must resolve before rendering.
    ProfileContainer.dependencies = [
      ({id}) => api.get(`/api/profile/${id}`),
    ];

    export default ProfileContainer;

    // profile.jsx
    export default (props) => (
      <div>
        <h1>My name is {props.profile.name}!</h1>
        <p>My interests are: {props.profile.interests.join(', ')}</p>
      </div>
    );

    // routes.js
    function someSortOfRouteHandler(Component, props) {
      const deps = Component.dependencies.map(dep => dep(props));
      Promise.all(deps).then(results => {
        render(<Component {...props} data={results[0]} />);
      });
    }

Now we know that ProfileContainer has a property called dependencies that contains the set of promise-returning functions that we need to resolve to render the presentational Profile component. We can fulfill these data dependencies in a route change event handler or something similar. Isolated and analyzable. Yay!
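To make the route-handler idea a little more concrete, here is a minimal sketch in plain JavaScript (no JSX) that collects the dependencies arrays from several matched components and waits for all of them. The collectDependencies helper, the stand-in components, and the stubbed loader are hypothetical names made up for illustration; they are not part of React or any router.

```javascript
// Hypothetical helper: run every dependency of every matched component
// and resolve with the results grouped per component.
function collectDependencies(components, props) {
  return Promise.all(
    components.map((Component) => {
      // A component without a `dependencies` property has nothing to load.
      const deps = Component.dependencies || [];
      return Promise.all(deps.map((dep) => dep(props)));
    })
  );
}

// Stand-ins for real container components and a real API call.
function ProfileContainer() {}
ProfileContainer.dependencies = [
  ({ id }) => Promise.resolve({ id, name: 'Danny', interests: ['Books'] }),
];
function Sidebar() {} // no data dependencies

const ready = collectDependencies([ProfileContainer, Sidebar], { id: '1' });
ready.then(([profileResults, sidebarResults]) => {
  console.log(profileResults[0].name); // data for ProfileContainer
  console.log(sidebarResults.length); // Sidebar had nothing to resolve
});
```

Because the external code only needs the resulting promise, the same helper could in principle be called from a server-side render or from a client-side route change.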

But I wanted to know if the React ecosystem in general had opinions about expressing component data dependencies. So I did the lazy thing and asked Dan Abramov and Ryan Florence about it on Twitter:

Ryan Florence on Twitter

@dbow1234 @dan_abramov don't have links on my phone but async-props, groundcontrol, redial, transit are a few that pop into my head

Ryan pointed me in the right direction and Mark Dalgleish helped me the rest of the way with the related projects section of his README:

Mark Dalgleish on Twitter

@nickdreckshage @dbow1234 that's why I like linking to related projects in readmes. It's like an old school web ring of JS libs

I ended up with this list:

- Redial by Mark Dalgleish (markdalgleish)

- AsyncProps by Ryan Florence (ryanflorence)

- GroundControl by Nick Dreckshage (nickdreckshage)

- React Resolver by Eric Clemmons (ericclemmons)

- React Async by Andrey Popp (andreypopp)

- React Transmit by Rick (rygu)

- React Refetch by Heroku

- Relay by Facebook

Some of the libraries are targeted at specific contexts or technologies — for example, AsyncProps is for React Router, GroundControl is for React Router and Redux, Relay depends on a GraphQL server, and React Async is to be used with Observables.

But as far as I can tell, the libraries differ most in their opinion of what the component(s) should look like, which changes how exactly they wire the data in (if at all).

Some only focus on providing a set of component-level hooks that you can use to a) define some stuff that needs to happen for the component to render (probably a promise-returning function), b) trigger that stuff, and c) know when that stuff is complete. This was the approach in the example I proposed above, where all we’re really doing is specifying an array of promise-returning functions and letting the surrounding code (in the router or wherever we’re rendering that container) handle the process of ensuring those actions are called. It’s conceptually similar to (though less powerful than) Mark Dalgleish’s Redial:

    // profile-container.jsx
    import { provideHooks } from 'redial';

    class ProfileContainer extends React.Component {
      render() {
        const { data = {name: '', interests: []} } = this.props;
        return <Profile profile={data} />;
      }
    }

    const hooks = {
      fetch: ({ id }) => api.get(`/api/profile/${id}`),
    };

    export default provideHooks(hooks)(ProfileContainer);

    // profile.jsx
    export default (props) => (
      <div>
        <h1>My name is {props.profile.name}!</h1>
        <p>My interests are: {props.profile.interests.join(', ')}</p>
      </div>
    );

    // routes.js
    import { trigger } from 'redial';

    function someSortOfRouteHandler(Component, props) {
      trigger('fetch', Component, props).then(results => {
        render(<Component {...props} data={results[0]} />);
      });
    }

This approach encourages, but does not necessarily require, you to write container and presentational components, and it leaves the specifics of populating the state or props to you. It’s a little less magical but more flexible, which might be useful if you have, say, a complex state container in your application.

The majority of the libraries allow you to write almost exclusively presentational components by abstracting away the container components. You specify which props should be populated from which external sources, and they take care of everything for you: you can just rely on those props magically being populated on the client and server. They differ mostly in how they expect the dependencies to be expressed, whether it’s just an API URL, a promise-returning function, or a GraphQL fragment.

For example, our Profile component could be written with Eric Clemmons’ react-resolver like this:

    // profile.jsx
    import { resolve } from 'react-resolver';

    const Profile = (props) => (
      <div>
        <h1>My name is {props.profile.name}!</h1>
        <p>My interests are: {props.profile.interests.join(', ')}</p>
      </div>
    );

    export default resolve('profile',
      ({id}) => api.get(`/api/profile/${id}`)
    )(Profile);

Or Heroku’s react-refetch like this:

    // profile.jsx
    import { connect } from 'react-refetch';

    const Profile = (props) => {
      const { profile } = props;
      // Show a placeholder until the fetched value is available.
      if (!profile.value) {
        return <div>Loading!</div>;
      }
      return (
        <div>
          <h1>My name is {profile.value.name}!</h1>
          <p>My interests are: {profile.value.interests.join(', ')}</p>
        </div>
      );
    };

    export default connect(({id}) => ({
      profile: `/api/profile/${id}`,
    }))(Profile);

These libraries essentially let you define a property on the component’s props object, and explain how that property should be populated. They handle the wiring of data dependencies into props for you, so you don’t have to think about writing container components or keeping your presentational components pure.
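The core of that wiring can be sketched without any library at all. What follows is a rough illustration, not how react-resolver or react-refetch are actually implemented: a hypothetical resolveProps helper takes a map of prop names to promise-returning functions and produces the final props once everything has resolved. The loadProfile stub stands in for a real API call.

```javascript
// Hypothetical helper: given a map of prop name -> promise-returning
// function, resolve them all and merge the results into the props.
function resolveProps(resolvers, props) {
  const names = Object.keys(resolvers);
  return Promise.all(names.map((name) => resolvers[name](props))).then(
    (values) => {
      const resolved = {};
      names.forEach((name, i) => {
        resolved[name] = values[i];
      });
      // Original props are kept; resolved values are added alongside them.
      return Object.assign({}, props, resolved);
    }
  );
}

// Stubbed loader standing in for api.get(`/api/profile/${id}`).
const loadProfile = ({ id }) =>
  Promise.resolve({ id, name: 'Danny', interests: ['Books', 'Pizza'] });

const finalProps = resolveProps({ profile: loadProfile }, { id: '1' });
finalProps.then((props) => {
  console.log(props.profile.name);
});
```

A presentational component would then simply receive the merged props, with no knowledge of where the profile came from.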

Which library or approach to use, as with everything, largely depends on your specific requirements. But, as the Relay team points out, your requirements are probably not that unique:

In our experience, the overwhelming majority of products want one specific behavior: fetch all the data for a view hierarchy while displaying a loading indicator, and then render the entire view once the data is ready.

The Heroku team also hints at what to think about when coming up with a data dependency strategy:

We started to move down the path of standardizing on Redux, but there was something that felt wrong about loading and reducing data into the global store only to select it back out again. This pattern makes a lot of sense when an application is actually maintaining client-side state that needs to be shared between components or cached in the browser, but when components are just loading data from a server and rendering it, it can be overkill.

Though the details will vary, all the libraries and approaches here basically encourage you to abstract your data dependencies into container (or higher order) components in a way that lets external code determine when they have been resolved, while keeping your presentational components pure — a pattern that I think is extremely powerful.

Thanks to Ryan Florence (ryanflorence) and Mark Dalgleish (markdalgleish) for pointing me in the right direction; Dan Abramov (dan_abramov) and Michael Chan (chantastic) for really evangelizing the container/presentational component approach; and all the authors of the libraries mentioned above for giving your code to the world!

Component Style

I think styling is one of the most interesting parts of the frontend stack lately. In trying to clearly outline a set of opinions about web development recently, I wanted to know if there was a “right” way to style components? Or, at least, given all the innovation in other parts of the stack (React, Angular2, Flux, module bundlers, etc. etc.), were there better tools and techniques for styling web pages? Turns out, the answer is, depending on who you ask, a resounding yes and a resounding no! But after surveying the landscape, I was drawn to one new approach in particular, called CSS Modules. Certain people seem to get extremely angry about this stuff, so I’m going to spend some time trying to explain myself.

First, some background on the component style debate.

If you wanted to identify an inflection point in the recent development of CSS thinking, you’d probably pick Christopher Chedeau’s “CSS in JS” talk from NationJS in November, 2014. — Glen Maddern

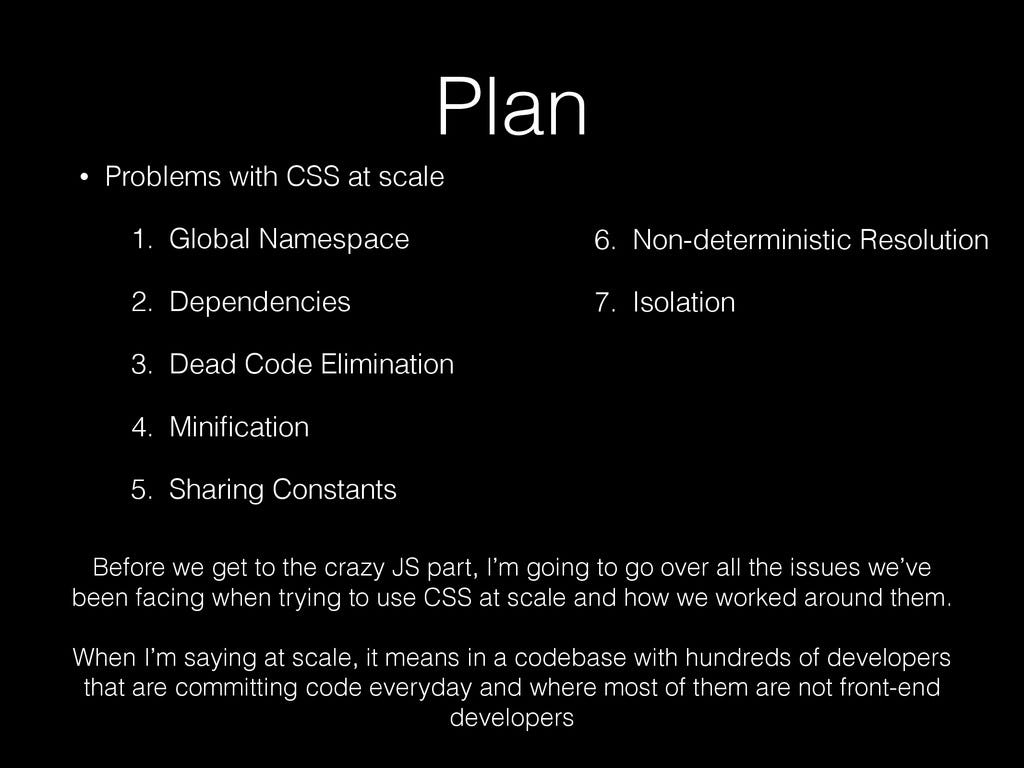

Chedeau’s presentation struck a nerve. He articulated 7 core problems with CSS, 5 of which required extensive tooling to solve, and 2 of which could not be solved with the language as it exists now. We’ve always known these things about CSS — the global namespace, the tendency towards bloat, the lack of any kind of dependency system — but as other parts of the frontend stack move forward, these problems have started to stand out. CSS is, as Mark Dalgleish points out, “a model clearly designed in the age of documents, now struggling to offer a sane working environment for today’s modern web applications.”

There has been a strong backlash to these ideas, and specifically to the solution proposed by Chedeau: inline styles in JavaScript. Some argue that there’s nothing wrong with CSS, you’re just doing it wrong (learn to CSS, essentially).

If your stylesheets are well organized and written with best practices, there is no bi-directional dependency between them and the HTML. So we do not need to solve the same problem with our CSS that we had to solve with our markup. — Keith J. Grant

These critics also say there are more problems with the inline style approach than with properly written CSS. They point to naming schemes like OOCSS, SUIT, BEM, and SMACSS — developers love acronyms — as a completely sufficient solution to the global namespace problem. Others argue that these problems with CSS are specific to the type of codebase found at Facebook, “a codebase with hundreds of developers that are committing code everyday and where most of them are not front-end developers.”

The Modern Separation of Concerns

I think the real fight here is over separation of concerns, and what that actually means. We’ve fought for a long time, and for good reason, to define the concerns of a web page as:

- Content / Semantics / Markup

- Style / Presentation

- Behavior / Interaction

These concerns naturally map to the three languages of the browser: HTML, CSS, and JavaScript.

However, React has accelerated us down a path of collapsing Content and Behavior into one concern. Keith J. Grant explains this unified concern eloquently:

For far too long, we waved our hands and pretended we have a separation of concerns simply because our HTML is in one file and our JavaScript is in another. Not only did this avoid the problem, I think it actually made it worse, because we had to write more and more complicated code to try and abstract away this coupling.

This coupling is real, and it is unavoidable. We must bind event listeners to elements on the page. We must update elements on the page from our JavaScript. Our code must interact bidirectionally and in real-time with the elements of the DOM. If it doesn’t… then we just have static HTML. Think about it, can you just open up your HTML and change around class names or ids without breaking anything? Of course not. You have to pull up your scripts and see which of those you need to get a handle on various DOM nodes. Likewise, when you make changes to your JavaScript views, you inevitably need to make changes to the markup as well; add a class or id so you can target an element; wrap an extra div around a block so you can animate it a certain way. This is the very definition of tight coupling. You must have an intimate knowledge of both in order to safely make any substantive changes to either.

Instead, the mantra of React is to stop pretending the DOM and the JavaScript that controls it are separate concerns.

“Inliners” tend to argue that Style is also a part of that overarching concern, which can maybe be called Interface. The thinking is that component state is often tightly coupled to style when dealing with interaction. When you take input from the user and update the DOM in some way, you are almost always changing the appearance to reflect that input. For example, in a TodoList application, when the user clicks a Todo and marks it as completed, you must update the style in some way to indicate that that Todo has that completed state, whether it is grayed or crossed out. In this way, style can reflect state just as much as markup.
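As a small sketch of that coupling, here is a hypothetical todoStyle function that derives an inline style object directly from a todo’s state. The function name and the specific style values are assumptions chosen for illustration.

```javascript
// Hypothetical helper: derive an inline style object from a todo's state.
function todoStyle(todo) {
  return {
    // Completed todos are crossed out and grayed; open ones are not.
    textDecoration: todo.completed ? 'line-through' : 'none',
    color: todo.completed ? 'gray' : 'black',
  };
}

const done = todoStyle({ text: 'Write post', completed: true });
const open = todoStyle({ text: 'Ship it', completed: false });
console.log(done.textDecoration); // line-through
console.log(open.textDecoration); // none
```

In a React component, an object like this could be passed to an element’s style prop, so the appearance changes whenever the state does.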

I think there is actually a second set of concerns being talked about here. This second set of concerns is more from the application architecture point of view, and it would be something like:

- Business Logic

- Data Management

- Presentation

On the web platform, the first two are handled by JavaScript, and a lot of the impulse towards inline styles is a move to get the whole presentation concern in one language too. There’s something nice about that idea.

The debate, though, is about whether or not style is a separate concern. This is, essentially, a debate over how tightly coupled style, markup, and interaction are:

Far too often, developers write their CSS and HTML together, with selectors that mimic the structure of the DOM. If one changes, then the other must change with it: a tight coupling.

…

If your stylesheets are well organized and written with best practices, there is no bi-directional dependency between them and the HTML. So we do not need to solve the same problem with our CSS that we had to solve with our markup.

~Keith J. Grant

Clear Fix

At this point, you should have a decent understanding of the different arguments over component style. I’m now going to outline my own opinions and highlight the approach that has emerged from them.

The foundational issue with CSS is fear of change

Recall the definition for tight coupling Grant offered:

You must have an intimate knowledge of both in order to safely make any substantive changes to either.

I think, ultimately, there are ways to organize and structure your CSS to minimize the coupling between markup and style. I think smart designers and developers have come up with approaches to styling — naming schemes, libraries like Atomic and Tachyons, and style guides — that empower them to make substantive changes to style and content in separation. And I respect the unease with the seemingly knee-jerk “Everything should be JavaScript!” reaction that seems to appear in every conversation lately.

That said, I like to minimize cognitive effort whenever possible. Things like naming strategies require a lot of discipline and “are hard to enforce and easy to break.” I have repeatedly seen that CSS codebases tend to grow and become unmanageable over time:

Smart people on great teams cede to the fact that they are afraid of their own CSS. You can’t just delete things as it’s so hard to know if it’s absolutely safe to do that. So, they don’t, they only add. I’ve seen a graph charting the size of production CSS over five years show that size grow steadily, despite the company’s focus on performance.

~ Chris Coyier

The biggest problem I see with styling is our inability to “make changes to our CSS with confidence that we’re not accidentally affecting elements elsewhere in the page.” Jonathan Snook, creator of the aforementioned SMACSS, recently highlighted this as the goal of component architecture:

This component-based approach is…trying to modularize an interface. Part of that modularization is the ability to isolate a component: to make it independent of the rest of the interface.

Making a component independent requires taking a chunk of HTML along with the CSS and JavaScript to make it work and hoping that other CSS and JavaScript don’t mess with what you built and that your component does not mess with other components.

I want to get rid of that fear of change that seems to inevitably emerge over time as a codebase matures.

JavaScript is not a substitute for CSS

There are a lot of very good arguments for sticking with CSS. It’s well-supported by browsers; it caches on the client; you can hire for it; many people know it really well; and it has things like media queries and hover states built in so you’re not re-inventing the wheel.

JavaScript is not going to replace CSS on the web — it is and will remain a niche option for a special use case. Adopting a styles-in-JavaScript solution limits the pool of potential contributors to your application. If you are working on an all-developer team on a project with massive scalability requirements (a.k.a. Facebook), it might make sense. But, in general, I am uncomfortable leaving CSS behind.

A Stylish Compromise

I’ve found CSS Modules to be an elegant solution that solves the problems I’ve seen with component styling while keeping the good parts of CSS. I think it brings just enough styling into JavaScript to address that fear of change, while allowing you to write familiar CSS the rest of the time. It does this by tightly coupling CSS classes to a component. Instead of keeping everything in CSS, e.g.

    /* component.css */
    .component-default {
      padding: 5px;
    }

    /* widget.css */
    .widget-component-default {
      color: black;
    }

    // widget.js
    <div className="component-default widget-component-default"></div>

or putting everything in JavaScript, e.g.

    // widget.js
    var styles = {
      color: 'black',
      padding: 5,
    };

    <div style={styles}></div>

it puts the CSS classes in JavaScript, e.g.

    /* component.css */
    .default {
      padding: 5px;
    }

    /* widget.css */
    .default {
      composes: default from 'component.css';
      color: black;
    }

    // widget.js
    import classNames from 'widget.css';

    <div className={classNames.default}></div>

Moving CSS classes into JavaScript like this gets you a lot of nice things that might not be immediately apparent:

- You only include CSS classes that are actually used because your build system / module bundler can statically analyze your style dependencies.

- You don’t worry about the global namespace. The class “default” in the example above is turned into something guaranteed to be globally unique. It treats CSS files as isolated namespaces.

- You can still create re-usable CSS via the composition feature. Composition seems to be the preferred way to think about combining components, objects, and functions lately — it makes a lot of sense for combining styles too, and encourages modular but readable and maintainable CSS.

- You are more confident of exactly where given styles apply. With this approach, CSS classes are tightly coupled to components. By making CSS classes effortless to come up with, it discourages more roundabout selectors (e.g. .profile-list > ul ~ h1 or whatever). All of this makes it easier to search for and identify the places in your code that use a given set of styles.

- You are still just writing CSS most of the time! The only two unfamiliar things to CSS people are 1) the syntax for applying classes to a given element, which is pretty simple and intuitive, and 2) the composition feature, which will probably feel familiar to people who’ve been using a preprocessor like SASS anyway.
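To illustrate the namespacing point, here is a toy sketch of what importing a CSS file as a module gives you: an object mapping each local class name to a unique generated one. The scopeClassNames function and its naming scheme are made up for illustration; real tooling uses its own schemes to generate the names.

```javascript
// Toy illustration only: derive a unique class name from the file name
// and the local class name. Real tools use their own naming schemes.
function scopeClassNames(fileName, localNames) {
  const base = fileName.replace(/\.css$/, '');
  const scoped = {};
  localNames.forEach((local) => {
    scoped[local] = `${base}__${local}`;
  });
  return scoped;
}

// Both files define a class called "default", but the names never collide.
const componentClasses = scopeClassNames('component.css', ['default']);
const widgetClasses = scopeClassNames('widget.css', ['default']);
console.log(componentClasses.default); // component__default
console.log(widgetClasses.default); // widget__default
```

The component only ever refers to the local name, so the generated name can change without touching the component code.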

I’ve found that I can focus more on the specific problem I’m solving — styling a component — and I don’t have to worry about unrelated things like whether a CSS class conflicts with another one somewhere in another part of the app that I’m not thinking about right now. My CSS is effortlessly simple, readable, and concise.

CSS Modules is still a nascent technology, so you should probably exercise all the usual caution you would around things like that. For example, I found this behavior/bug to be particularly frustrating, and I expect I’ll run into more things like that. But I see them as rough edges on a very sound and promising model for styling components.

A lot of the most exciting stuff with CSS Modules directly relates to module bundling and the modern approach to builds — a topic for a future post perhaps.

I obviously read, linked, and quoted articles and talks by many other folks here. Thanks to Glen Maddern, Christopher Chedeau, Mark Dalgleish, Keith J. Grant, Michael Chan, Chris Coyier, Joey Baker, Jeremy Keith, Eric Elliott, Jonathan Snook, Harry Roberts, and everyone else who is thinking about this stuff in thoughtful and caring ways!

Learning to Code

Yes Yes Yes, to everything in this incredible piece my friend Anna wrote about programmer and writer Ellen Ullman: Hacking Technology’s Boys’ Club:

“I am dedicated to changing the clubhouse,” Ullman told me. “The way to do it, I think very strongly, is for the general public to learn to code.” It doesn’t have to be a vocation, she said. But everyone, she argued, should know the concepts—the ways programmers think. “They will understand that programs are written by people with particular values—those in the clubhouse—and, since programs are human products, the values inherent in code can be changed.” It’s coding as populism: Self-education as a way to shift power in an industry that is increasingly responsible for the infrastructure of everyday life.

“We really need to demystify all the algorithms that are around us,” Ullman told me as we walked down 2nd Street. “I keep hearing over and over, we want to change the world. Well: from what to what? Change it so everyone gets their dry-cleaning delivered?” Who will build the future we’ll live in? What will it look like? Right now, the important thing is that we still have a choice. “That’s why I’m advocating that everyone should learn to code at some level,” Ullman continued, “to bring in their cultural values, and their ideas of what a society needs, into this cloister.”

Many of my friends and acquaintances over the years have voiced a desire to learn to code and asked me for advice or thoughts on that path. I usually say, essentially, that “it’s not for everyone.” And then I try asking if the prospective coder has certain interests or predilections that might align with the real work of programming, the stuff you end up doing day-to-day. Did they enjoy figuring out why the VCR player or TV wasn’t working when they were growing up, for example? In asking these questions, I think that I’m trying to save the person from putting a lot of time into a skill that they might hate - like asking a person if they hate chopping onions when they’re pondering becoming a chef.

I also sometimes downplay the inherent value of learning to code. I loathe the idea in tech that code can solve all problems, or that tech will inevitably “disrupt” everything in our culture. And I worry about this narrative driving people to want to learn to code simply to stay relevant.

Ullman voices such an important and alternative view on this question. There is a narrative in the tech world that I think of as code mysticism - the idea that what programmers do is unknowable, indescribable, and unquantifiable to non-programmers. And that it can only be done by the chosen few - real hacker-y types. It’s not hard to imagine many reasons for this narrative to be perpetuated by those in power (e.g. engineers in Silicon Valley right now). And I think, as much as I hate the idea of code mysticism, when people ask me if they should learn programming, my typical response promotes that same kind of “Right Stuff” mythology.

As Anna writes, “Code, for all its elegance and power, is just a tool.” And learning the mental models and thought patterns employed by the users of that tool means you overcome that mystical protective barrier that the owners of that knowledge put up to keep out any influence from people unlike them.

For a more specific critique of the human values inherent in code right now, I would recommend Jaron Lanier’s, “You Are Not a Gadget: A Manifesto.” He argues that the current way of thinking about digital culture emerges from a philosophical foundation called cybernetic totalism, which essentially de-emphasizes people and humanity in favor of gadgets and computation.

But a simple example of the values of the programmer shaping our mental models in ways that have become assumed or implicit would be Facebook. Built by a white male programmer, Facebook basically models a person as a collection of likes. Even early on, you were asked to list your favorite movies and music and join groups organized around what you all liked.

There is nothing essential about this notion of personhood. You could model a person in many other ways - a set of experiences, dislikes, beliefs, physical traits, empathies, emotions, whatever. But this one white male programmer decided that he thought of people as the sum of what they liked, and now billions of people are heavily involved in a system that completely assumes this notion of personhood.

So, yes, if you’re at all interested in learning to code, you should do it. It’s like any other education or knowledge in that it empowers you to understand and influence more of the world. And, specifically, a world that is, as Anna writes, “increasingly responsible for the infrastructure of everyday life.” We need more perspectives and backgrounds and viewpoints influencing the models we make of the world and the technologies we produce from those models.

History

I have begun to see the aging man’s obsession with History in myself. It is only a matter of time until my shopping cart is packed to the brim with David McCullough tomes.

I took courses on a lot of things in college: Political Science, Computer Science, Music Theory, Philosophy, Literature, Film Theory, Cultural Theory, Accounting, foreign languages, Neuroscience, Architecture, Linguistics. Some were harder than others (Music Theory was probably the toughest course I ever took), but I always felt like I understood the basic idea of the discipline - what the field was about, its foundation. It was mostly a matter of putting in the time and effort to build up the details around that foundation.

But History was the one discipline that I never quite got.

This is one of the distinguishing marks of history as an academic discipline - the better you know a particular historical period, the harder it becomes to explain why things happened one way and not another.

…

In fact, the people who knew the period best - those alive at the time - were the most clueless of all. For the average Roman in Constantine’s time, the future was a fog. It is an iron rule of history that what looks inevitable in hindsight was far from obvious at the time. Today is no different. Are we out of the global economic crisis, or is the worst still to come? Will China continue growing until it becomes the leading superpower? Will the United States lose its hegemony? Is the upsurge of monotheistic fundamentalism the wave of the future or a local whirlpool of little long-term significance? Are we heading towards ecological disaster or technological paradise? There are good arguments to be made for all of these outcomes, but no way of knowing for sure. In a few decades, people will look back and think that the answers to all of these questions were obvious.

(from Yuval Noah Harari’s excellent book Sapiens: A Brief History of Humankind)

I increasingly see History as the most foundational of disciplines: trying to understand an outcome as a function of Matter moving in Space and Time. As an academic discipline, it defines the “Matter” as humans and culture. We try to understand how the humans living in the 1930s moved through Space and Time in such a way that a second World War happened. But, one can think of Biology as History where the “Matter” is a plant and the outcomes are things like the conversion of light energy to chemical energy.

History is an attractive field because it takes on that most essential question we have: Why?

The above passage made me wonder about all the interest in statistics and predictive analytics today. That conundrum at the top is worth pondering - that knowledge of a sequence of events decreases your ability to make strong causal statements about it, or at least to reduce the role of randomness in an explanation. I think that’s why History is also incredibly frustrating. When looking at a sufficiently complex (and therefore interesting) system (like human culture), can you actually arrive at any satisfying answers to that essential question?

Colophon

Note: This goes well beyond a traditional colophon into “Behind the Scenes” or “The Making Of” territory.

The reasons for redesigning one’s website are to a large degree the same as those for getting a haircut - putting one’s best (or at least a better) foot forward, aligning exterior with interior, etc. But in a redesign, there can also be value in the process itself. In that sense, it is closer to giving oneself a haircut (if that were advisable) - beneficial both in outcome and in performance.

This is an account of that process in excruciating detail. If you are not interested in design, fonts, layout, JavaScript, and/or web servers, and you have a tendency towards compulsively finishing things that you start, you may want to stop here.

~

The last version of my site (still available at //old.d-bow.com/) was designed in 2011 in a few hours in San Francisco with help from Adobe’s Fireworks and Ritual's wonderful coffee. I spent some time picking the color palette, designing the navigation, and incorporating a game. I did not spend time on the composition or presentation of the content. The design looked like an artless, clumsy subway poster.

I wanted my new site to focus on the content - the projects I’ve worked on, the things I’ve written, etc. And I wanted the design to get out of the way of that content. I wanted it to feel like an old book elegantly digitized.

Design

To achieve this, I focused on four particular aspects of web design: typography, color, navigation, and responsiveness.

Typography

The font was one of the most important parts of the design since most of the content is type. I wanted a font that worked well for body copy (of which there would be quite a bit) but had a bit of quirkiness and vintage character, like a weird antique. I went with Livory by HVD Fonts. I considered picking a second font for headlines, accents, etc. but decided against it in the name of simplicity (and I had no plans for elaborate navigational or decorative elements that would warrant a second font).

A moment for acknowledgements: I would never have ventured as far into the world of typography without the guidance of Meagan Fisher, who talked me through the whole process and gave me an invaluable reading list (within which the most valuable piece was this article by Jessica Hische (who, incidentally, did the illustrations for this incredible series from Penguin (a series on which, incidentally, my friend Henry worked (a circumstance that gave me the strong sense that, in choosing type, you should draw inspiration from the timelessness of classics and the warmth of friendship)))).

I should mention the drop caps and the ampersand: Meagan and Dan Cederholm wrote a blogpost many years ago on using the best ampersand, and she suggested that I use the italic Baskerville ampersand in the header. The drop caps in the “Insta-poems” section were also suggested by Meagan, and inspired by Jessica’s Daily Drop Cap project. They seemed like a nice touch given the literary, ornamental content. I spent a while sifting through the decorative fonts on Typekit before settling on Jason Walcott’s Buena Park. The “A” won me over.

Color

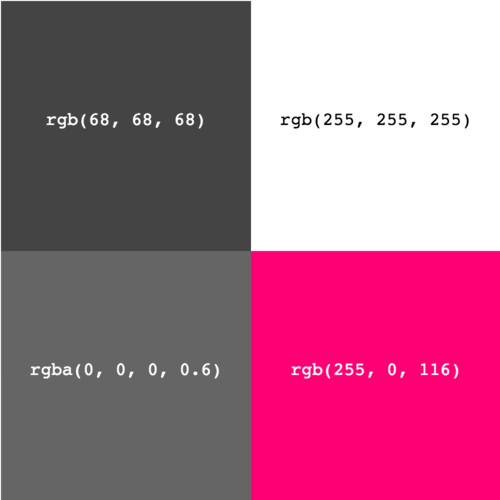

The palette that constitutes what I feel obligated to call “my style” tends to be fairly muted, earthy, and/or traditional, with a single surprising highlight - a small dissonance within comfortable harmony. My winter wardrobe, for example, involves a lot of unprovocative sweaters over just-visible brightly-colored t-shirts. I wanted the color palette for the site to seem very simple and comfortable: just a white background and medium-dark gray text. But to add that little highlight, the anchor tags would be a rich, saturated pink. And, finally, to bring out the headlines in the “Writing” section, there would be an almost-black gray.

The final color palette:

Navigation

I wanted to try something a little different with the navigation. The standard, time-honored approach is to implement a menu at the top or side of the content, with an organized collection of links that separate the different types of content. I tried a few of those designs but they felt strange in this context. That navigation makes sense for a publication or business, where the name and logo should be prominently displayed and you want to allow for clear navigation of very distinct site sections. But I wanted something a bit more personable and low key.

I ended up using a more “embedded” navigation - a sentence in the header that outlines who I am and all the things present on the site, but also serves as the way to navigate between those things. It’s essentially combining the navigation and site summary.

I like it a lot - it feels efficient and straightforward. It also makes the whole site feel more like a single piece of content than an over-engineered “interface.” However, I think it can be confusing and would probably not advocate for its use in anything but a portfolio website. It breaks from widespread convention on the web, which will most likely make it less clear to some users.

Responsiveness

For the first time, I wanted my website to totally shrink on phones. Since it’s largely text, it was very easy to constrain the content with a few media queries. The trickiest parts were updating my Tumblr layout (finding a way to include all of the extra navigational items in the minimal design) and handling the video background in the “Work” section. Video does not auto-play on phones (for good reason) and it took a little bit of trickery to ensure that mobile visitors see an alternate image instead of downloading the video. I think the last thing to do would be to blur the “Work” section images beforehand in Photoshop rather than in the browser with a CSS blur filter - the filter is extra work the browser doesn’t need to do (and it isn’t supported in certain browsers).

Presentation

There were different challenges to presenting the content in each section of the site.

The “info” section was fairly straightforward - just a few paragraphs. But simplicity and brevity are perhaps the greatest challenges (as this post illustrates brutally).

For the “Work” section, I wanted to present the projects in a more visual way since I spend most of my time building the stuff you see in a website. I took screenshots of each project and used them as the background behind a name and a brief summary. The use of a semi-transparent background color to highlight the text in front of the images was inspired by Happy Cog’s work on visitphilly.com. A blur filter helps reduce any conflict between the text and the image (though, as mentioned above, this should be done to the underlying images). The embedding of a video from one of the projects is a dynamic highlight, though not without its costs - video makes the page a bit slower than it otherwise would be (and produces issues on mobile, outlined above).

In the “Writing” section, I wanted to present a subset of the things I’ve written over the years on my blog, but avoid conflicting “sources of truth.” I wanted the blog to remain the place for actually reading the posts, since it has useful functionality built-in (permalinks, commenting, tagging, etc.). So the “Writing” section pulls in a handful of posts and only shows roughly the first paragraph before blurring away and linking to the actual post on my blog.

Lastly, I added an “Insta-poem” section. It borders on pretense, but some of my posts on Instagram do occupy a fair bit of time and/or mental energy and I wanted a place to present them as a collection. The main challenge was deciding how much of the Instagram UI to include. I ended up creating my own simpler UI using just the image and caption, with a link to the actual post on Instagram. I wanted the site to serve as a collection of links to the actual source content, rather than a redundant location (the same reason for showing only part of the posts in the “Writing” section).

Implementation

The site was implemented with Angular and Google App Engine and the full source is here.

It uses very little of Angular’s considerable feature set - just routing, data-binding, and one API call to get the Tumblr data (posts and instapoems).

The single API call to get the Tumblr data could be moved to the server, with the response embedded in the rendered page. However, that could add a little latency to the site and I prefer to give the sense that it loads very quickly at the expense of possibly not having all the content immediately. Additionally, the content that loads dynamically is the least essential (the blogposts and instapoems, both of which can be seen on other reliable platforms).

App Engine was used to handle the game data on the old site, for which it was well-suited. In the current site, it does very little, apart from handling the Tumblr API calls and caching.

Adding a third party library to App Engine was quite tricky - probably because I’m a bit rusty with Python and there isn’t great documentation on it. After a lot of searching, I based my solution on this project, which involves a requirements.txt file to enumerate dependencies (to be installed via pip), a vendor.py file to add folders to the Python path, and some code to add the ‘lib’ directory to the path in both appengine_config.py and main.py. It doesn’t feel elegant (and I’ve forgotten some of the details) but it works.

In building websites, you constantly face the choice of whether to use an existing solution or build one yourself. In fact, it’s one of the most essential parts of most software engineering. Choosing incorrectly can result in many hours spent in the most frustrating way possible. But I often feel that it’s similar to the moments in long drives where you choose between the interstate that you know might have a lot of traffic and the alternate route that might take you far out of your way (and also have traffic). You only think about the choice if you end up arriving far later than you hoped, and you immediately assume that the other option would have had a better outcome. But you never know - maybe there just wasn’t a way to drive through Washington D.C. in less than 3 hours that day.

I felt this way about the choice to use pytumblr to communicate with the Tumblr API. It seemed like the quick solution for a non-critical part of the application. Many people had spent many hours implementing a package that communicated with the API the correct way. There was no need for me to do it myself if I could just add that package and write a line of code and have what I needed. However, I spent a long time trying to figure out the third party library issue above, which made the choice seem ill-advised after the fact since I was making a single call to the API that I probably could have written manually.

The only other notable implementation detail is my use of Gulp to build the production version of the site. I spent some time learning about each module I used (in the past I’ve just blindly used the sequence of commands presented to me by a scaffold or another developer), and I commented on the commands in the gulpfile as a guide.

One particular program I used is Autoprefixer. It parses CSS and adds vendor prefixes only where necessary, based on current information from caniuse. I find that the majority of my usage of things like SASS and LESS involves mixins for vendor prefixes, nesting for organization (which I’ve come to believe creates more complexity than it eliminates, similar to how they feel at Medium), and variables for simpler maintenance of large codebases. So with Autoprefixer in place, I ended up just writing vanilla CSS.

I still think CSS preprocessors like LESS and SASS have value in larger applications, but I have been striving to avoid using a lot of the more advanced features to avoid the incidental complexity they create (hiding the true purpose of the code behind arbitrary language constructs in the name of organization or DRY-ness). Writing vanilla CSS felt simple and easy for a site like this.

~

I’m overall very pleased with how the site turned out, and even more pleased with all the things I learned along the way. Hopefully this writeup is useful to anyone thinking of doing something similar!

Kanye Zone, Digital Signatures, and the Nature of Reality

I. They Got In Our Zone

On March 10, 2012, the “greatest video game of all time,” Kanye Zone, hit the front page of Reddit and traffic went from single digits to hundreds of thousands overnight. The game has a leaderboard of top scores where people who perform superlatively at keeping Kanye out of his Zone can write a short message and post their score. As the game rapidly became more popular, this leaderboard, intended as a celebratory and civil space for high achievers, disintegrated into a cesspool of increasingly offensive messages and clearly impossible scores meant to troll the growing userbase.

Probably everyone who has made a browser-based HTML5 game has run into the problem of some users “hacking” it to post “forged” high scores. I even saw it with my personal website game. I was fortunate that my site was of little interest to anyone beyond my friends to try to attack, but with Kanye Zone we faced a much larger pool of motivated attackers who found ways around every guard we put in place. We ended up having to limit the high scores to only the most recent hour to deter people from putting in the time to figure out how to post fraudulent scores. In other words we did not prevent attacks but rather made attacks less fun and/or rewarding.

II. Cryptography and Candy Bars

[Note: Throughout this post I am thinking exclusively about JavaScript/HTML5 programs running in the browser, not compiled programs running via plugins (e.g. Flash).]

It’s generally accepted that there is no way to validate with 100% certainty any output from a JavaScript program running on a malicious client. But I’ve been wondering lately if that’s actually the case, and if so, why, exactly?

The methods to generate and submit fraudulent values are fairly straightforward. At its core, any program like this must keep track of some value, and at some significant time, generate a network request to pass that value along to the server. A malicious actor can either change the value (which will then be sent along by the program), or generate its own network request that passes along its own value.

The obvious solution would be to implement some sort of digital signature whereby the server can validate that a message it receives (e.g. a new high score) was sent by a program that was run until it actually reached that state (e.g. achieved that high score). A digital signature is supposed to provide two types of assurance to a recipient of a message:

1. That the message was actually sent by the sender (Authentication).

2. That the message was not altered on the way (Integrity).

[Another part of #1 is that the sender cannot deny having sent it (Non-Repudiation), but that is not pertinent to this.]

An analogy:

You have a friend Veronica who wants to know how many candy bars to buy at the store. She’ll buy any amount written in a letter she receives as long as it’s from you. You want her to buy 20 Hershey’s bars, but you have some chocolate-addicted friends who want her to buy way more than that. You’re worried that they will try two things to get her to buy the extra bars:

1. Send a letter requesting 100 Hershey’s bars in an envelope with your return address.

2. Wait at the mailbox for the letter you actually send, open it up, change the “20” to “100”, re-seal it, and put it back in the mailbox.

You need to provide Authentication to prevent their first attack, so Veronica can validate that a letter supposedly from you is actually from you, and Integrity to prevent the second attack, so she can determine that the letter she receives has not been modified in the meantime by someone else.

A simple signature in this analogy would be a scheme where every character in the letter has a different numeric value, and you simply send along the sum of all those values with your message.

As an example, say this was a part of the scheme:

| Character | Value |
| --- | --- |
| 0 | 3 |
| 1 | 10 |
| 2 | 89 |
| 3 | 2 |

To request 20 Hershey’s bars, the digital signature you’d send along would be 92 (89 + 3). You and Veronica would agree on the scheme beforehand, and only the two of you would know what each character was worth. In other words, it would be a secret you both shared. So when she received your letter with “20”, she could perform the conversion herself to make sure that it matches the 92 you sent along as the signature. Now someone else could not send a letter pretending to be you because they would not know how to generate that signature from the message. And they cannot intercept your letter and change it because then the message Veronica receives would not match the original signature you provided. Through this scheme we have achieved Authentication and Integrity and she can be confident that the letter she receives is both actually from you and has not been changed. She can buy those 20 Hershey’s bars with confidence!
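To make the arithmetic concrete, here is a minimal sketch of that toy scheme in JavaScript. The names `SECRET_VALUES`, `sign`, and `verify` are made up for illustration, and the value table only covers the four characters listed above.

```javascript
// Hypothetical shared secret: the per-character values from the example scheme.
const SECRET_VALUES = new Map([
  ['0', 3],
  ['1', 10],
  ['2', 89],
  ['3', 2],
]);

// Sum the secret value of every character in the message.
function sign(message, values) {
  let sum = 0;
  for (const ch of message) {
    if (!values.has(ch)) {
      throw new Error(`No secret value for character "${ch}"`);
    }
    sum += values.get(ch);
  }
  return sum;
}

// Veronica recomputes the signature and compares it to the one in the letter.
function verify(message, signature, values) {
  return sign(message, values) === signature;
}

const signature = sign('20', SECRET_VALUES); // 89 + 3
console.log(signature); // 92
console.log(verify('20', signature, SECRET_VALUES)); // true
console.log(verify('100', signature, SECRET_VALUES)); // false (10 + 3 + 3 = 16)
```

A plain sum is only good enough for the analogy: it ignores character order, so “02” would also sign to 92. Real signature schemes use far stronger functions, but the shape is the same: recompute from the shared secret and compare.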

III. A World Without Secrets

The problem with the conventional approach to digital signatures in the context of a client-side program is that you have to assume the entire client is compromised. A user loading your program can execute any code in any execution context, so there’s not an obvious way to keep a secret.

Digital signatures depend on some kind of shared secret. So the first thing to think about is if there is any place at all in a web page where a server can place a secret that only one specific program has access to. But as far as I can tell browsers do not currently offer this kind of security to a specific program or execution context:

- All network traffic can be observed.

- Closures (language-level privacy) can be accessed.

- Same-origin policy can be bypassed.

In a world where there are no secrets, it is seemingly impossible to provide Authentication. No actor in that world has anything that is not accessible, reproducible or mutable by other actors. How would you identify yourself in a world where your social security number, appearance, even your DNA were readable and reproducible by other people? If another person existed who knew everything you knew, looked exactly like you, and had your DNA, you could not authenticate that you were the “real” you - at that moment you and the other person are identical from the point of view of any third party. In such a world, poor Veronica has no way of knowing whether one of your chocolate addict friends just took the knowledge of the numeric scheme from you and is now sending messages with your signature. In fact, they could just change your thoughts so that you actually wanted “100” Hershey’s bars.

IV. State Change-Based Messaging

What if we were to merge “Identity” and the message into a signature that is a combination of starting state and modification? Let’s say that Veronica changes her approach and decides to send you a math test, saying that for each problem solved, she’ll buy a Hershey’s bar. There would be no way for the chocolate addicts to trick her into getting more Hershey’s bars just by sending a request or intercepting the test and changing a few numbers. They could not fake it without solving the problems, and if they solve the problems then they aren’t faking it. In other words, she doesn’t care about the “realness” of the person or what they say, just that they provide something that strongly implies they have gone through the things she specifies - if they have, they are “real” or “valid.” She can’t verify that you specifically solved the problems, but she can verify that the work was done. Indeed she no longer really cares whether it’s you - her ambitions have widened and she wants to reward anyone who has solved those math problems. Identity is no longer possession of a secret, but rather evidence of having done work. The message is no longer protected by the secret, but rather contained in the work itself. The secret is the difference between the starting state (unsolved math problems) and the ending state after work (solved math problems).

Let’s return to the browser. If we cannot keep anything about our program secret to provide Authentication of its starting state (i.e. identifying the program that has our secret key), maybe we can ensure that the program end state cannot be faked (i.e. identifying the program that started this way and then did these things)? Assuming the program consists of state and functions that modify that state, we want to somehow tie the state to the sequence of function calls. For example, say our program has a variable called total that starts at 0 and a method called score that increments the total. A malicious client could just set the variable to 1000 and we would have no way of knowing that it is not equivalent to 1000 calls to score. But what if, for example, the total variable were set to a random seed from the server, and each call to score replaced total with a hash of its current value? There would be no way to “fake” 1000 calls to score, since there would be no way to predict what the total variable would be after 1000 calls to score except by actually performing those calls. This would provide a digital signature of sorts, where the program could send along “1000” and then the resulting hash, and the server could hash the original input 1000 times to see if it matches. If it does, it knows that the program state was actually modified 1000 times. It is a digital signature that provides Authentication and Integrity of program state changes. The message is basically “I started this way and then underwent these changes.” The secret in this instance is much more abstract. Rather than a thing that lets you generate an output that cannot be reversed to the input state (like our numeric scheme with Veronica), we have an input that cannot be converted to its output without going through the actual conversion (like solving math problems). The secret is in the conversion.
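Here is a rough sketch of that idea for Node, assuming SHA-256 as the hash (the post doesn’t pick one). `createGame`, `verifyReport`, and the shape of the report object are hypothetical names for illustration, not code from Kanye Zone.

```javascript
const crypto = require('crypto');

// Assumption: SHA-256 over hex strings; any cryptographic hash would do.
function sha256Hex(input) {
  return crypto.createHash('sha256').update(input).digest('hex');
}

// Client side: total starts at the server's seed and every score() call
// replaces it with a hash of its current value.
function createGame(seed) {
  let total = seed;
  let calls = 0;
  return {
    score() {
      total = sha256Hex(total);
      calls += 1;
    },
    report() {
      return { calls, total };
    },
  };
}

// Server side: hash the seed it issued the reported number of times
// and compare the result with the reported total.
function verifyReport(seed, report) {
  if (!Number.isInteger(report.calls) || report.calls < 0) return false;
  let expected = seed;
  for (let i = 0; i < report.calls; i += 1) {
    expected = sha256Hex(expected);
  }
  return expected === report.total;
}

const seed = crypto.randomBytes(16).toString('hex');
const game = createGame(seed);
for (let i = 0; i < 1000; i += 1) game.score();

const honest = game.report();
console.log(verifyReport(seed, honest)); // true
console.log(verifyReport(seed, { calls: 2000, total: honest.total })); // false
```

In a real deployment the server would also want to cap `calls` so a bogus report can’t make it hash forever, and issue a fresh seed per session so old reports can’t be replayed.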

At this point we would be able to confirm that a program had started from a certain point and then gone through a sequence of specific modifications. However, a program usually has certain logical statements that determine when modifications take place. What if you wanted to confirm that those logical statements had been obeyed? As an example, say you had two squares in the program being drawn at random places on the screen and you only wanted to call the score method when those squares overlapped. How would you confirm that that program logic had been observed and the squares had overlapped 1000 times, rather than a malicious actor simply calling the score method 1000 times? Perhaps you could validate that a function that checks whether they overlap was being called X times. You could also validate the result sequence of that function by storing the result in a bitmap and sending that along with the message. And you could factor the state of the overlap function into the update to the score state each time. So if overlap is true, the score is updated one way, false a different way. With all of that in place, the server could recreate that sequence-dependent state change to validate that the result actually reflected that sequence of logical states.
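One way to sketch that sequence-dependent update, again assuming SHA-256 and Node. A string of “1”/“0” characters stands in for the bitmap, and `step`, `createTracker`, and `verifyReport` are hypothetical names for illustration.

```javascript
const crypto = require('crypto');

// Fold one overlap check into the running state. Prefixing "1" or "0"
// makes a true result update the state differently from a false one.
function step(state, overlapped) {
  const tag = overlapped ? '1' : '0';
  return crypto.createHash('sha256').update(tag + state).digest('hex');
}

// Client side: record each check's result and fold it into the state.
function createTracker(seed) {
  let state = seed;
  let bits = ''; // one character per overlap check, in order
  let score = 0;
  return {
    check(overlapped) {
      bits += overlapped ? '1' : '0';
      if (overlapped) score += 1;
      state = step(state, overlapped);
    },
    report() {
      return { bits, score, state };
    },
  };
}

// Server side: replay the reported sequence from the seed it issued and
// confirm that both the final state and the score follow from it.
function verifyReport(seed, report) {
  if (typeof report.bits !== 'string' || !/^[01]*$/.test(report.bits)) {
    return false;
  }
  let state = seed;
  let score = 0;
  for (const bit of report.bits) {
    const overlapped = bit === '1';
    state = step(state, overlapped);
    if (overlapped) score += 1;
  }
  return state === report.state && score === report.score;
}

const tracker = createTracker('seed-from-server');
[true, false, true, true].forEach((overlapped) => tracker.check(overlapped));
const report = tracker.report();
console.log(report.bits, report.score); // 1011 3
console.log(verifyReport('seed-from-server', report)); // true
console.log(verifyReport('seed-from-server', { ...report, score: 1000 })); // false
```

This only shows that the reported score is consistent with the reported sequence of checks. It says nothing about whether the squares really overlapped when the client claimed they did.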

V. Psychophysics

But because a client has read/write access to every execution context, we will never be able to trust that the environment or the variables and state external to the program have not been tampered with. Basically, you would have no way of knowing whether the two squares were being manipulated somehow.

In our analogy, Veronica is trying to authenticate that you know the answers to math problems to know how many Hershey’s bars to get. From your response, she can conclude that you found the answers to some number of math problems. Unfortunately, that is the only thing she can conclude. She cannot determine if you had a calculator, or if you asked another person for help, or if you actually understood the answers, or if another being momentarily inhabited your body and forced you to complete the test. We would have no way of detecting changes to the environment, inputs to the program, or things the program is supposed to measure external to it.

VI. “Woah”

When you attempt to detect artificial inputs to a program, you quickly move from security and architecture questions to more philosophical musings: What is reality? How do we know that what we see is real? What does it mean to be “human?” How do you detect “human-ness?” etc. The output of a malicious program that affects the environment / external variables (moves a mouse or moves DOM elements or mocks out parts of the environment) would have to be detected as non-human, as the work of a non-human intelligence. And we currently do not really have a good way of even understanding or conceptualizing stuff like this, let alone reliably detecting or discerning it (see Jeff Hawkins’ On Intelligence, or Brian Christian’s The Most Human Human, as two examples of great books on the topic).

So I believe this is about the best we can do to provide client-side program digital signatures. We can send out a program with a starting state, tie its state to its methods for state modification, and then confirm that a specific sequence of modifications occurred. And we can analyze the sequence to attempt to validate that it seems “legitimate” to the best of our ability, but we cannot be 100% sure that the program was being run in any specific environment. Client-side programs are a little like the people in The Matrix - they receive inputs and react to them and they can confirm that things make sense given the sequence of inputs and what they know about the world. But they have no way of knowing whether the inputs come from reality or from weird human energy harvesting pods built by machines.

VII. Some Kind Of Conclusion That Is Really Just Ending Up Where We Started But With Maybe A Newfound Understanding Of Stuff

While this feels like giving up and admitting that we can never be sure of anything (in client-side programs or in Life), this is actually a totally acceptable state of affairs. Cryptography does not ever promise 100% security and certainty. Cryptography operates in the realm of probability, a realm wholly alien to the way the brain normally perceives the world. In the original analogy for a digital signature, even if everything goes perfectly and I send Veronica the letter with my digital signature and she validates it, she can never be 100% certain that a malicious actor didn’t just guess and get it right. It’s an outcome with an incredibly small probability if your security/cryptography is designed correctly, but it is still possible.

The act of securing any system is adjusting the slider on that scale between effort and reward. You do just enough to make tampering with the system unappealing and/or unlikely to work, given the level of interest in the outcomes associated with tampering. If you were to implement a full-fledged state-modification validation scheme as I outlined for a program running in a malicious client, you would be raising the bar to tampering. A malicious actor could not easily just send fraudulent values anymore; they would have to construct a way to recreate your program’s states or interface with your program. And hopefully that’s not appealing. But if a thing is appealing enough to tamper with, malicious actors can always rise up and surpass the bar. Online poker has seen this issue with increasingly sophisticated bots created to interface with the program. Taken even further, you can imagine a scenario where the rewards of simulating a user interacting with a program are so great that a person designs a robot to sit at a desk and detect visual changes on the monitor to know how to move the mouse. The program then has the daunting task of discerning computer input from human input solely on the basis of the nature of the input. At this point, security becomes a Turing test and there is no perfect solution.

So I guess, in the end, design a sufficient level of security given the value of tampering with your program, lower the value of tampering to the best of your ability, and rest easy knowing that probably nobody would ever invest the time and money and effort to build a robot to operate your program because it’s just a silly game about Kanye West.

[Thanks to Kyle for thoughts/input on this]

SMiLE

The myth of The Beach Boys’ SMiLE first took form in my head driving from Marin to San Francisco with my friend Matt a year or two ago. We were discussing, I don’t know, the 60s and music? I was into the Beach Boys, having re-discovered them through the eyes of the Elephant 6 collective. I was less into the Beatles but still curious. I learned of the great pop music rivalry that yielded Rubber Soul (Dec ’65) > Pet Sounds (May ’66) > Revolver (August ’66) > Good Vibrations [single] (October ’66), and finally Sgt. Pepper’s Lonely Hearts Club Band (June ’67). How each band’s albums had been one-ups of the other’s, Pet Sounds setting the bar dominantly, only to be surpassed by Sgt. Pepper’s, which remains the pinnacle of a certain kind of upper echelon 60s pop in the collective imagination.

But there was supposed to be SMiLE, Brian Wilson’s unfinished work of probable genius that became more legendary, sublime, difficult and masterful the more its too-close-to-the-sun story of ambition and collapse took hold. I learned how Wilson had viewed SMiLE as a project that would eclipse Pet Sounds as much as Pet Sounds had eclipsed everything before it, becoming the ultimate pop masterpiece. And how hearing Sgt. Pepper’s gave him a nervous breakdown as he realized the Beatles had leapfrogged him, adding crippling and fatal stress to the SMiLE project.

We listened to a version of it I had (one of the bootleg attempts at recreating what it may have been) in the car and I remember approaching it with genuine openness. But I didn’t really appreciate it or feel like I could get much out of it at the time. I’ve been re-visiting it over the last few days and it has rewarded the time and attention. I’ve also benefited from the release of The SMiLE Sessions in 2011, which provides a fairly definitive release that I like more than the bootleg I had.

Some things I’ve read, gathered, and thought a lot about:

It was to be a “teenage symphony to God” and a “monument.”

It is about America, origin to present day, coast to coast and beyond, myth and reality.

Wilson and Parks intended Smile to be explicitly American in style and subject, a reaction to the overwhelming British dominance of popular music at the time. It was supposedly conceived as a musical journey across America from east to west, beginning at Plymouth Rock and ending in Hawaii, traversing some of the great themes of modern American history and culture including the impact of white settlement on native Americans, the influence of the Spanish, the Wild West and the opening up of the country by railroad and highway. Specific historical events touched upon range from Manifest destiny, American imperialism, westward expansion, the Great Chicago Fire, and the Industrial Revolution. Aside from focusing on American cultural heritage, Smile’s themes include scattered references to parenthood and childhood, physical fitness, world history, poetry, and Wilson’s own life experiences. [source]

During this period, Wilson and Parks were working on an enormous canvas. They were using words and music to tell a story of America. If the early-60s Beach Boys were about California, that place where the continent ends and dreams are born, SMiLE is about how those dreams were first conceived. Moving west from Plymouth Rock, we view cornfields and farmland and the Chicago fire and jagged mountains, the Grand Coulee Dam, the California coast– and we don’t stop until we hit Hawaii. Cowboy songs, cartoon Native American chants, barroom rags, jazzy interludes, rock'n'roll, sweeping classical touches, street-corner doo-wop, and town square barbershop quartet are swirled together into an ever-shifting technicolor dream. [source]

It is a profoundly psychedelic album, but with a darker focus. Where the Beatles seemed to view psychedelics as a liberating path to insight and love, or something, SMiLE fixates on the mental break inherent in the experience:

Amid the outtakes – stuff even the most dedicated bootleg collector won’t have heard – there’s a telling moment. “You guys feeling any acid yet?” asks Wilson during a session for Our Prayer. He sounds terrified. LSD didn’t make Wilson relax and float downstream: it scared the shit out of him, lighting the touch paper on a whole range of mental health problems. And he couldn’t keep the fear out of his music. Even at its most remorselessly upbeat, the Beach Boys’ music was marked by an ineffable sadness – you can hear it in the cascading tune played by the woodwind during Good Vibrations’s verses – but on Smile, the sadness turned into something far weirder. All the talk of Wilson writing teenage symphonies to God – and indeed the sheer sumptuousness of the end results – tends to obscure what a thoroughly eerie album Smile is. Until LSD’s psychological wreckage began washing up in rock via Skip Spence’s Oar and Syd Barrett’s The Madcap Laughs, artists tactfully ignored the dark side of the psychedelic experience. But it’s there on Smile: not just in the alternately frantic and grinding mayhem of the instrumental Fire, but in Wind Chimes’s isolated, small-hours creepiness and the astounding assembly of weird, dislocated voices on I Love to Say Da Da.

Isolated, dislocated, scared: Smile often sounds like the work of a very lonely man. There’s not much in the way of company when you’re way ahead of everyone else. Whether anyone would have chosen to join Wilson out there in 1967 seems questionable: perhaps they’d have stuck with the less complicated pleasures of All You Need Is Love after all. What’s beyond doubt is the quality of the music he made. [source]

My favorite line from that review is “It was a psychedelic experience Hendrix wouldn’t understand, but Don Draper might.” I tend to agree with that review’s conclusion that even if it had been released in 1967, it would probably not have Changed Music History so much as been the book-end to a particular pop music trajectory, the ultimate square psychedelic pop album that maybe went a bit too far. Perhaps a “normal” American mind warped by psychedelics is just more disturbing than a mind that already lives on the outskirts?

Wilson had gone a tad off the deep end. Mary Jane had long been a friend, but Wilson had grown increasingly close with Lucy (we mean acid, folks). This both allowed for the extreme insights he had during recording and began to dissemble the already overworked and paranoid Wilson. He became convinced the “Fire” section of ‘Elements Suite’ was responsible for local fires, ceasing recording of the material, had his band dress in firemen hats, and so on. [source]

There are some profoundly beautiful moments, and some profoundly eerie and disturbing moments. “Our Prayer” is beautiful. “Do You Like Worms” is deeply eerie, especially the harpsichord part. I really love “Wind Chimes” and “Surf’s Up.” “Heroes and Villains” had/has “Good Vibrations”-style musical ambitions and was supposed to be the thematic centerpiece, but it falls short for me in a way I can’t quite put my finger on.

The story/legend/myth of SMiLE certainly adds a lot to the experience of it. And the musical/lyrical experience alone is every bit as demanding and layered and ambitious as legend had it. But I think we wouldn’t have been quite as willing to live in and explore that warped pop drug vision of America as we maybe assumed.

JS Drama Reading List or: How I Learned to Stop Worrying and Love the Semicolon

A couple of months ago, there was a lot of drama in the JavaScript world. I thought the anger and intensity of the debate was really silly, but I think it did do a good job of prompting a lot of frontend engineers (me included) to revisit and brush up on automatic semicolon insertion (which was a good thing). I finally got around to reading all the things on it in my todo list, and wanted to share some of the best:

- Probably the most reasonable discussion of the actual rules and background.

- A really inflammatory but sort of compelling plea to at least understand the rules and facts before making a decision.

- A blogpost by one of the two people who started the whole drama, explaining his decision to not use semicolons.

- A reasonable summary/reaction by the inventor of JS.

My ultimate opinion was something along the lines of what Brendan Eich said:

“The moral of this story: ASI is (formally speaking) a syntactic error correction procedure. If you start to code as if it were a universal significant-newline rule, you will get into trouble.”

I agree that it’s important to understand this part of the language. But I personally would not think of it as a “feature” but rather as a “safeguard,” and treat it as such. Also, I always err on the side of obviousness and simplicity, rather than minimalism and trickiness. If I want to end a statement, I want to explicitly end it with the semicolon. This feels less error-prone for me as the writer, AND easier for people reading my code to know my intention. I think the readability aspect was downplayed by the people arguing for not using semicolons. I find the Bootstrap code tougher to read because I’m not used to semicolon-less code. This issue is sort of hilariously brought up here. But in that group discussion, I think they still ignore the readability issues and the benefits of having a common style in the community (the discussion really only points to a sort of territoriality as justification for adopting a given style).

The whole argument sort of felt like ASI is a minefield, and there are some people who know how to avoid the mines and can go straight across with minimal risk; but there are others who don’t know how to spot the mines that well, and instead opt for a slightly longer route that everyone tells them to take because it’s safer. I don’t think that’s necessarily an irresponsible decision (not to learn to spot the mines when there is a route that bypasses them). But, again, the best thing to come out of this was probably alerting a lot of developers that there is a way to spot those mines and it’s probably worth learning it, independent of which style you want to use.
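For anyone who wants to see a couple of the mines, here is a small sketch of two well-known ones next to their explicit-semicolon equivalents. The function names are made up for illustration, and this is not a complete list of the ASI rules, just the two cases that seem to come up most often.

```javascript
// Mine 1: ASI ends the statement right after `return`, so the braces below
// parse as an unreachable block (with a label), not a returned object.
function returnOnOwnLine() {
  return
  {
    ok: true
  }
}

// The explicit version: the value starts on the same line as `return`.
function returnWithValue() {
  return {
    ok: true
  };
}

// Mine 2: a line that starts with `[` continues the previous statement,
// because the code is still valid without inserting a semicolon.
function missingSemicolonBeforeBracket() {
  const values = [10, 20, 30]
  const picked = values
  [1].toString()
  return picked
}

// The explicit version: the semicolon ends the assignment where intended.
function explicitSemicolons() {
  const values = [10, 20, 30];
  const picked = values;
  [1].toString();
  return picked;
}

console.log(returnOnOwnLine());               // undefined
console.log(returnWithValue());               // { ok: true }
console.log(missingSemicolonBeforeBracket()); // 20 (a string, not the array)
console.log(explicitSemicolons());            // [ 10, 20, 30 ]
```

In both cases the newline looks like it should end (or continue) the statement, and the parser disagrees. That is the sense in which ASI is error correction rather than a significant-newline rule.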

Paying for music: my 2 cents (pun intended)

Credibility-establishing Editorial Note: I have paid for all my music in at least the last 5 years. I relish my trips to my favorite local record store to (gasp) buy CDs, and I use several music services that are “legitimate” (like Spotify, Turntable, Pandora, and iTunes).

Like everyone else on the Internet lately, I read this blog post arguing in favor of people paying for music recordings to support artists. But rather than argue against any of the specific points (a temptation very difficult to resist, especially when he seemingly tries to argue that my CD burning activity in high school was to blame for several suicides), I want to think more about the fundamental question: “Why don’t people want to pay for recorded music?”

In a lot of these anti-piracy arguments, there’s the assumption that artists are entitled to monetary compensation for their recordings. I believe this assumption is now false, and that’s the real reason for the changes in how people acquire and consume recorded music.

The assumption that artists should be compensated with money for recording music is probably based on economic and technological factors in the 20th century, when the means to produce and distribute quality music were limited to “professionals.” Recording equipment required experience and expertise to produce anything listenable. Additionally, getting recordings into the hands of a large audience required a lot of infrastructure - creating the physical albums, shipping them, marketing them, etc. When the production and distribution of music had such barriers to entry, recorded music had economic value and people were willing to pay for the music that made it through those barriers. Musicians were operating in an industry, producing something that only a limited set of people had the means to produce.

So what is “economic value?” I’m using it to mean the perceived monetary exchange rate for a given good or service. And it is usually predicated on that good or service not being widely accessible - basic supply and demand. This has always been the case. If I were a cabinetmaker, I would never pay for someone else to make me a cabinet because it’s a service directly accessible to me - I would have the requisite expertise, means, and desire to perform the task, so the value to me of someone else doing it would be well below what they would charge. But other people are farmers or lawyers or plumbers, and they do not have the expertise or means to make cabinets. So they would pay me to produce cabinets. Because the supply of cabinets is low, the supply of cabinet-making skills is low, and the demand for cabinets is high, those cabinets and skills have more economic value.

Have you used music production software lately? It’s really easy to make a professional-sounding recording. And once you do, it’s super easy to post the file online and then anyone in the world with an internet connection can hear it. In my mind, music production no longer has the barriers to entry that give it economic value. Amateurs are often making music that I like more than “professional” recordings. Heck, even I can make music that I like listening to more than a lot of “professional” recordings. In my view, recording music no longer carries economic value, so I generally don’t feel the need to compensate musicians with money for their recordings.

If this seems cruel or arrogant or ridiculous, consider this passage from This is Your Brain on Music: The Science of a Human Obsession (bolding added by me):